Introduction

Now that we have discussed the basic tasks of the mixing phase, I would like to add some notes that can help you (they helped me) achieve better mixes.

How can you improve your mixes?

Paradoxically, some of the basic things that help you achieve better mixes have nothing to do with plugins or outboard gear.

1. Acoustically treat your room

There is no way around it, believe me. You can think about it all you want, but without a minimally treated room in which to mix, one where you can control the reverberation, echoes, resonances and other things the room itself adds to or subtracts from the sound you are mixing, there is no way to achieve a good mix that translates to other systems or environments outside your mixing room.

The shape and dimensions of your room, the furniture, decorative elements, walls, building materials, windows, doors, etc.: all of it together generates certain anomalies that alter the way your mix is reproduced through your monitors.

Flat surfaces are highly reflective, and sound bounces from side to side, creating echo and reverberation problems. When these delayed reflections combine with the direct sound, they produce an effect called comb filtering.

In square or rectangular rooms, bass frequencies build up in the corners and resonate, dramatically affecting the reproduction of your mix.

These resonant frequencies, determined by the dimensions of the room, are known as room modes.
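To get a feeling for where those modes fall, here is a rough Python sketch that computes only the axial modes (the ones between a single pair of parallel walls), using f = n * c / (2 * L). It assumes a speed of sound of 343 m/s and a hypothetical room of 4.0 m by 3.0 m by 2.5 m; tangential and oblique modes, which also exist, are ignored.

```python
# Axial room modes: f_n = n * c / (2 * L) for each room dimension L.
# Assumptions: speed of sound 343 m/s (air at about 20 C) and a hypothetical room size.
SPEED_OF_SOUND_M_S = 343.0


def axial_modes(length_m: float, count: int = 5) -> list[float]:
    """Return the first `count` axial mode frequencies (Hz) between two parallel surfaces."""
    return [n * SPEED_OF_SOUND_M_S / (2.0 * length_m) for n in range(1, count + 1)]


room = {"length": 4.0, "width": 3.0, "height": 2.5}  # hypothetical dimensions in metres
for name, size in room.items():
    modes = ", ".join(f"{f:.1f}" for f in axial_modes(size, count=4))
    print(f"{name:>6} ({size} m): {modes} Hz")
```

Where modes from different dimensions land close together (or a room has two equal dimensions, as in a square room), the buildup at those frequencies gets worse.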

There are software solutions that fix some of these problems, such as IK Multimedia's ARC System, which analyzes how the reproduced sound deviates from the source sound and creates a correction curve that equalizes the output so as to "remove" most of the room's problems.

But even though this kind of software helps a lot (if only to see at which frequencies you have problems), it isn't a definitive solution, just a compromise.

Only bass traps can really get rid of low-frequency problems.

Other problems, such as flutter echo, can't be solved with simple corrective equalization.

There is free software (such as http://www.hometheatershack.com/roomeq/) that lets you analyze the problems in your room, even with your computer's sound card. It's a good starting point for improving your mixing environment.

Acoustically isolating (soundproofing) a room means lining it with materials that stop a large amount of sound, so that sound can't leave the room and outside sound can't get in.

To isolate, what you need most is mass: thick, high-density materials. Since no single material blocks all frequencies equally, a common solution is a "sandwich" of several insulating layers with air chambers between them.

So the idea that egg cartons can soundproof a room is completely wrong. Without mass, there is no way to isolate a room.
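The "mass" argument can be put into numbers with the idealized mass law, often quoted for field incidence as TL ~= 20 * log10(m * f) - 47 dB, where m is the surface density in kg/m² and f the frequency in Hz. It is only a rough approximation (it ignores panel resonances, the coincidence dip and flanking paths), and the surface densities below are illustrative assumptions, but it shows why each doubling of mass buys only about 6 dB and why light materials stop so little bass.

```python
import math


def mass_law_tl_db(surface_density_kg_m2: float, freq_hz: float) -> float:
    """Idealized field-incidence mass law: TL ~= 20*log10(m*f) - 47 dB."""
    return 20.0 * math.log10(surface_density_kg_m2 * freq_hz) - 47.0


# Illustrative surface densities (kg/m^2), not measured values.
layers = [("single board", 10.0), ("double board", 20.0), ("quadruple board", 40.0)]
for label, mass in layers:
    low = mass_law_tl_db(mass, 100.0)
    high = mass_law_tl_db(mass, 1000.0)
    print(f"{label:>15}: {low:5.1f} dB at 100 Hz, {high:5.1f} dB at 1 kHz")
```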

Acoustically treating a room, however, is a different thing. It consists of correcting the sonic deviations that the room introduces into the playback of our mix.

Here we use absorbers, whose function is to absorb or reduce (not completely remove) the energy in a certain range of frequencies. Their mass, the shape and pattern of their surface and their internal structure, among many other variables, determine what percentage of each frequency they absorb.
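How much absorption changes a room can be estimated, very roughly, with Sabine's formula RT60 ~= 0.161 * V / A, where V is the room volume in m³ and A is the sum of each surface area times its absorption coefficient. The sketch assumes a hypothetical 30 m³ room and made-up, single-band absorption coefficients; real coefficients vary with frequency, and Sabine's formula is least accurate in small, heavily treated rooms.

```python
def sabine_rt60(volume_m3: float, surfaces: list[tuple[float, float]]) -> float:
    """Sabine reverberation time in seconds: 0.161 * V / sum(area * absorption_coefficient)."""
    absorption_m2 = sum(area * alpha for area, alpha in surfaces)
    return 0.161 * volume_m3 / absorption_m2


volume = 4.0 * 3.0 * 2.5  # hypothetical 30 m^3 room
# (area in m^2, absorption coefficient): illustrative values, not measurements.
bare = [(12.0, 0.05), (12.0, 0.05), (35.0, 0.05)]  # floor, ceiling, walls
treated = [(12.0, 0.30), (12.0, 0.05), (25.0, 0.05), (10.0, 0.80)]  # carpet, ceiling, walls, panels

print(f"bare room:    {sabine_rt60(volume, bare):.2f} s")
print(f"treated room: {sabine_rt60(volume, treated):.2f} s")
```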

Standard absorbent panels tame a broad range of frequencies, but to handle low frequencies we need bass traps, which require different materials, shapes and placement in the room.

Besides absorbers, we also use diffusers, whose goal is to scatter reflections so they don't keep bouncing back and forth between the walls. They are often used to correct problems caused by reverberation and echoes, helping to "dry" the room.

The typical egg carton can be used as a diffuser (not as insulation).

There are a few things we can easily do, for example, putting a thick, heavy carpet on the floor to absorb floor reflections.

We can put some high-density foam, or some kind of high-density board, in the corners to reduce low-frequency problems.

We can put up a shelf with irregularly sized books, CDs and any sort of irregular objects to help scatter reflections, and so on.

Short of an expensive professional solution, an acoustic treatment kit such as Auralex's Project 2 could be a good way to improve our mixing room, tackling all kinds of problems at once.

2. Correctly place your monitors

Nearfield monitors should be placed 30 degrees to either side of your listening position. The distance between the two monitors should equal the distance from each monitor to your listening position, so that the two monitors and the listening position form the three vertices of an equilateral triangle.
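The equilateral triangle is easy to lay out with a bit of trigonometry. In this sketch the listening position sits at the origin facing +y, and each monitor goes 30 degrees to one side at a distance equal to the triangle's side; the 1.2 m side is just a hypothetical value.

```python
import math


def monitor_positions(side_m: float) -> tuple[tuple[float, float], tuple[float, float]]:
    """Return (x, y) positions of the left and right monitors for an equilateral triangle.

    The listener is at the origin facing +y; each monitor is 30 degrees off-axis, side_m away.
    """
    angle = math.radians(30.0)
    x = side_m * math.sin(angle)
    y = side_m * math.cos(angle)
    return (-x, y), (x, y)


left, right = monitor_positions(1.2)  # hypothetical 1.2 m triangle side
print(f"left  monitor: x={left[0]:+.3f} m, y={left[1]:.3f} m")
print(f"right monitor: x={right[0]:+.3f} m, y={right[1]:.3f} m")
print(f"monitor spacing: {math.dist(left, right):.3f} m")
```

The computed spacing between the monitors equals the listening distance, which is exactly the equilateral condition.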

You could use one of those cheap laser levels that emit two beams at an adjustable angle to fine-tune the position of your monitors.

3. Calibrate your monitors

The usual calibration standard is that, with your volume knob at its 0 dB position, you should measure 83 dB SPL at your listening position while playing pink noise band-limited to 500 Hz to 2 kHz at a level of -20 dBFS RMS.

To measure the sound pressure level (SPL), you will need an RTA or an SPL meter (such as the T.Meter MPAA1). The meter tells you the level, in dB SPL, that your monitors produce at your listening position. Adjust your monitors' volume control until you read 83 dB SPL. (Bob Katz's K-System procedure calibrates each monitor separately, with the meter set to C weighting and slow response.)

When you read 83 dB SPL, mark that position of the volume control as 0.

To play pink noise band-limited to 500 Hz to 2 kHz, you will need either a plugin that can generate it or a downloadable file containing suitable calibration material.

In Pro Tools, you can insert the Signal Generator plugin on an instrument track to generate full-bandwidth pink noise at -20 dB. After it, insert an equalizer plugin to cut everything below 500 Hz and above 2 kHz.

Leave all faders on all buses at 0 dB (unity), including the output fader. If you have an RMS meter (such as the TT Dynamic Range meter or IXL Inspector), you can verify that the RMS level of the generated pink noise is -20 dB.
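If you prefer to generate the calibration signal yourself, the sketch below shows the level arithmetic: approximate pink noise, then a single gain that brings its RMS to -20 dBFS. It assumes the widely circulated "economy" pink-noise filter attributed to Paul Kellet, measures plain RMS relative to a full-scale amplitude of 1.0 (some meters add 3 dB so that a full-scale sine reads 0 dB, so your meter may read differently), and leaves out the 500 Hz to 2 kHz band-limiting. In practice, band-limit first and set the level afterwards, because filtering changes the RMS.

```python
import math
import random


def pink_noise(n: int, seed: int = 1) -> list[float]:
    """Approximate pink (-3 dB/octave) noise: Kellet's 'economy' filter applied to white noise."""
    rng = random.Random(seed)
    b0 = b1 = b2 = 0.0
    out = []
    for _ in range(n):
        white = rng.uniform(-1.0, 1.0)
        b0 = 0.99765 * b0 + white * 0.0990460
        b1 = 0.96300 * b1 + white * 0.2965164
        b2 = 0.57000 * b2 + white * 1.0526913
        out.append(b0 + b1 + b2 + white * 0.1848)
    return out


def rms_dbfs(x: list[float]) -> float:
    """Plain RMS level in dB relative to a full-scale amplitude of 1.0."""
    return 20.0 * math.log10(math.sqrt(sum(s * s for s in x) / len(x)))


def scale_to_rms(x: list[float], target_dbfs: float) -> list[float]:
    """Apply the single gain that brings the RMS level of x to target_dbfs."""
    gain = 10.0 ** ((target_dbfs - rms_dbfs(x)) / 20.0)
    return [s * gain for s in x]


noise = scale_to_rms(pink_noise(48000), -20.0)  # 1 second at 48 kHz
peak_db = 20.0 * math.log10(max(abs(s) for s in noise))
print(f"RMS: {rms_dbfs(noise):.2f} dBFS, peak: {peak_db:.2f} dBFS")
```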

But... what is all this for?

Good question.

83 dB SPL is the standard level used in cinema.

If your volume control has a zero mark, and that mark corresponds to 83 dB SPL (with the pink noise described above), anything you mix at this volume setting and are happy with should end up free of clipping, overs and the like.

If you achieve a nice mix at that control position, you should be able to master it later without problems, and it should translate to other devices or environments without the problems introduced by some A/D or D/A converters.

This calibration level corresponds to the K-20 meter designed by Bob Katz.

When mastering, or when making a demo, we usually compress the program by 6 to 8 decibels.

Repeat the operation described above, but this time turn your volume control down until your meter reads 77 dB SPL. This corresponds to lowering the volume by 6 dB. Mark this position as -6.

You will usually master pop material, or any other kind of music that isn't especially energetic, at this level.

This corresponds to the K-14 meter designed by Bob Katz.

Repeat the operation once more, lowering the volume a bit further until your meter reads 75 dB SPL.

This corresponds to lowering the volume by 8 dB. Mark this position as -8.

You will master material that requires high sonic impact, such as rock, at this level.

This corresponds to Bob Katz's K-12 meter.
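The three marks follow a simple pattern: for a K-N meter, the monitor mark is N - 20 dB relative to the K-20 calibration, so the calibration noise reads 83 + (N - 20) dB SPL at that mark. A small sketch of that arithmetic (the function names are hypothetical):

```python
REFERENCE_SPL_DB = 83.0  # SPL at the 0 mark with -20 dBFS RMS pink noise (from the text)


def monitor_mark_db(k_number: int) -> float:
    """Monitor gain mark for a K-meter: K-20 -> 0, K-14 -> -6, K-12 -> -8."""
    return float(k_number - 20)


def expected_spl_db(k_number: int) -> float:
    """SPL the calibration noise should read with the volume control at that mark."""
    return REFERENCE_SPL_DB + monitor_mark_db(k_number)


for k in (20, 14, 12):
    mark = monitor_mark_db(k)
    amplitude = 10 ** (mark / 20)  # amplitude ratio of the monitor gain change
    print(f"K-{k}: mark {mark:+.0f} dB, noise at {expected_spl_db(k):.0f} dB SPL, "
          f"monitor amplitude x{amplitude:.3f}")
```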

So far, so good. Monitors calibrated... what else?

To mix, always use the 0 mark.

You can check the mix at different volume levels, of course, but if the mix sounds good at your 0 mark, you should have achieved a dynamic mix, without excessive compression and ready for the mastering phase. Such a mix could even serve as a film soundtrack without further adjustment.

To master, move the control to the -6 or -8 mark, depending on the type of material and its destination (CD, broadcast, MP3, etc.).

In theory, using these control marks removes the need to watch meters to check that the resulting sound stays within the proper levels, and you shouldn't have to worry about clipping or overs.

4. Check the mix in mono and with 16-bit dithering

If your monitor controller, your audio interface or any metering plugin has a Mono button, engage it to check how well your mix holds up in mono.

Mono mode is very useful for checking the relative levels of all the instruments in the mix.

It's also a good tool for checking whether some instruments have overlapping frequencies, and whether the corrective equalization we are applying to each instrument really helps each one to be heard individually.
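Why mono reveals problems is easy to see numerically. The sketch below folds a stereo pair to mono as (L + R) / 2 (an assumption: some monitor controllers sum differently, for example with a -3 dB pan law) and compares a centered signal with one whose right channel has its polarity inverted, as can happen with badly phased stereo wideners or microphones.

```python
import math


def to_mono(left: list[float], right: list[float]) -> list[float]:
    """Fold a stereo pair to mono by averaging the two channels."""
    return [(l + r) / 2.0 for l, r in zip(left, right)]


def rms(x: list[float]) -> float:
    return math.sqrt(sum(s * s for s in x) / len(x))


sr = 48000
tone = [math.sin(2 * math.pi * 440 * i / sr) for i in range(sr // 10)]  # 100 ms at 440 Hz

centered = to_mono(tone, tone)                # same signal on both sides
inverted = to_mono(tone, [-s for s in tone])  # right channel polarity-inverted

print(f"centered: {rms(centered):.3f} RMS, inverted: {rms(inverted):.3f} RMS")
```

The centered tone keeps its level, while the polarity-inverted one vanishes completely; content with a partial phase problem loses level or tone in the same way when the mix is played in mono.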

One more thing that drove me crazy for a long time was converting a good 24-bit mix to 16 bits (to test a master on CD or to convert it to MP3).

Much of the material that sounded good at 24 bits started to sound muddy, with excessive low end and a clear loss of spatial information.

The Sonnox Limiter plugin, which I use as a brickwall limiter at the end of the mix bus, has a useful button that lets you hear the mix with 24-bit dithering (the usual while mixing) or 16-bit dithering.

I've often found that a mix that sounds nice at 24 bits can sound really bad at 16 bits. This button is very useful for fine-tuning the amount of compression, reverb and the rest of the processing applied to the whole mix.

When I think I am done, I always check the mix with 16-bit dithering and fix any problems before bouncing the final material.

Practically every DAW comes with a dithering plugin that we can use at the end of the mastering chain.

Enable 16-bit dithering to check whether you are overdoing your processing.

When you convert from a higher resolution (24 bits) to a lower one (16 bits), you lose 8 bits of information. Those low-order bits carry the finest nuances of the sound and often contain spatial information (reverb tails, echoes, etc.).

This loss of information makes the sound "quantized", or stepped, so that it sounds artificial and lifeless.

Dithering addresses this problem. It is a mathematical process that adds very low-level noise (on the order of the least significant bit), with different spectral curves (types), before the word length is reduced. This noise decorrelates the quantization error from the music: instead of stepped distortion we get a smooth, constant noise floor, and details below the last bit remain audible through it. The converted sound is therefore closer to the original, with more resolution and detail than without dithering.
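The effect can be reproduced with a small experiment. The sketch below quantizes a hypothetical 1 kHz tone whose amplitude is only 0.4 of a 16-bit step, once by plain rounding and once with TPDF (triangular) dither spanning +/-1 LSB, one common dither type. Without dither the tone disappears completely; with dither it survives, buried in a steady noise floor but still correlated with the original.

```python
import math
import random


def to_16bit(samples: list[float], dither: bool, seed: int = 0) -> list[int]:
    """Quantize float samples (full scale = 1.0) to 16-bit integers, optionally with TPDF dither."""
    rng = random.Random(seed)
    out = []
    for s in samples:
        x = s * 32768.0
        if dither:
            # TPDF dither: sum of two independent uniform values, spanning +/-1 LSB.
            x += rng.uniform(-0.5, 0.5) + rng.uniform(-0.5, 0.5)
        out.append(max(-32768, min(32767, round(x))))
    return out


def correlation(q: list[int], ref: list[float]) -> float:
    """Unnormalized correlation: positive if q still carries the reference signal."""
    return sum(a * b for a, b in zip(q, ref))


sr = 48000
tone = [0.4 / 32768.0 * math.sin(2 * math.pi * 1000 * i / sr) for i in range(sr)]

plain = to_16bit(tone, dither=False)
dithered = to_16bit(tone, dither=True)

print("undithered non-zero samples:", sum(1 for v in plain if v != 0))
print("dithered output still correlated with the tone:", correlation(dithered, tone) > 0)
```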

Every time we bounce our mix at 16 bits (for CD, MP3, ...), we should apply dithering. Results are easier to predict if, during mixing or mastering, we check the mix both at the project resolution (32 or 24 bits) and at the final bounce resolution (16 bits).

26 March 2013

21 March 2013

Home Studio: Mixing - Part 5

Introduction

In the previous blog entry we introduced the concepts of sound dynamics and dynamic range. Now we can start talking about the tools that modify the dynamics of sound, which are widely used in mixing and mastering.

Compressor

Imagine that we have done our mix (following the three-dimensions technique mentioned before) and ended up with levels similar to those in the picture below.

We already discussed how this TT Dynamic Range meter works, so now we should be able to understand this picture.

We can see that the average RMS is around -27 dB and the peak RMS around -14 dB, while the dynamic range is 12.5 dB and 10.5 dB for the left and right channels, respectively.

Definitely not a very commercial loudness: our mix will sound very quiet compared to any commercial mix.

We could simply push the mix fader up by about 13.5 dB, placing the average RMS around -13.5 dB and leaving the peak RMS around -0.5 dB, more or less.

But since we are talking about peak RMS, some individual peaks will "overflow" that target ceiling of -0.5 dB, producing clipping. A limiter can prevent that, but once again we would be flattening those peaks anyway (because the compression ratio of a limiter is infinite).

So the natural solution would be to lower the channel fader a bit until there is no more clipping (that red light that flashes from time to time), but this lowers the average RMS of the program again and, therefore, the loudness of our mix. We would end up with a nice-sounding mix (with enough dynamic range) that sounds clearly quieter than our competitors' commercial mixes.

The peaks of the attack phase of a track are called transients. In a track, or in the whole mix, not all peaks have the same height (level). Only a very small percentage of them "overflow", and those are the ones that force us to lower the fader, costing the mix some strength.

The compressor is a tool that "compresses" the peaks above a certain level so that they fit within a given dynamic range. Although this is its main function, it has several others, since it can reshape the whole dynamic curve of the material being processed, accentuating the parts we are most interested in.

Let's look at a compressor plugin, for example:

Not all compressors use the same names for their controls, but more or less all of them (some have a few more knobs than others) share the same basic controls.

The gain, or make-up, control lets us raise the overall level of the material. Strictly speaking, make-up gain is applied after the gain reduction, to make up for the level the compressor removes; in this example, what matters is how much overall gain we add.

Following the previous example:

Imagine that we want a dynamic range of about 12 dB, with our average RMS at around -12 dB. Before compressing, the average RMS was around -27 dB, so we need to raise everything by 15 dB (27 - 12 = 15), and we set the gain control to +15 dB.

If our ceiling is set at -0.5 dB (to avoid clipping and overs when converting the mix to lower resolutions such as 16 bits or MP3), raising the average RMS to -12 dB leaves a dynamic range of only 11.5 dB, and the peaks will "overflow".
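The level bookkeeping in this example is plain dB addition and subtraction. This sketch just restates the numbers from the text (-27 dB average RMS, -14 dB peak RMS, a -12 dB target and a -0.5 dB ceiling) and computes how far the peaks end up above the ceiling, which is the excess the compressor has to deal with:

```python
avg_rms_db = -27.0     # average RMS before compression (from the example)
peak_rms_db = -14.0    # peak RMS before compression (from the example)
target_avg_db = -12.0  # where we want the average RMS
ceiling_db = -0.5      # output ceiling

gain_db = target_avg_db - avg_rms_db      # gain needed to lift the average
new_peak_db = peak_rms_db + gain_db       # where the peak RMS lands after that gain
headroom_db = ceiling_db - target_avg_db  # room left between average and ceiling
overshoot_db = new_peak_db - ceiling_db   # excess the compressor must remove

print(f"gain: +{gain_db:.1f} dB, peaks at {new_peak_db:+.1f} dB, "
      f"headroom {headroom_db:.1f} dB, overshoot {overshoot_db:.1f} dB")
```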

The threshold control sets the minimum level the signal must reach for the compressor to start compressing. Everything below the threshold remains uncompressed (though raised by the gain or make-up control), while everything above it is compressed. How it is compressed depends on the rest of the controls we are going to describe.

The best way to find the threshold is trial and error. With a very low threshold, we compress practically everything; with a very high one, we compress only the highest peaks.

The correct threshold is the level where the interesting things are happening in our mix. Raising or lowering it radically changes the result, the accent of the song, so it is very important to find the exact threshold value that gives our mix the accent we want.

The attack control determines how quickly the compressor reacts once the signal crosses the threshold, that is, how long it takes to reach its full gain reduction. With shorter attacks, the compressor acts earlier and clamps down on every single peak; overdone, this can make our mix lose its percussive character.

With long attack settings, we let most transients through before the compressor reacts. We retain the punch, but we go back to the initial problem: some peaks will overflow.

Normally, we use short attacks to tame peaks (for example, overly percussive sounds such as a bass guitar, kick drum or snare) and longer attacks to preserve the punch of tracks whose punch is weak.

The release control handles the last phase of the compression curve (the "R" of ADSR): once the signal falls back below the threshold, it determines how long the compressor takes to stop compressing and return to unity gain. Long releases keep the gain reduction acting on more of the body of each note, while short releases let it act mainly on the start of the sound (the attack phase). To give more sustain or density to a weak bass guitar, we usually lengthen the release time.

Together, the attack and release controls define how the peaks are tamed, and they can either add punch or smooth the material.

The Sonnox Dynamics compressor has an additional control, named hold, which acts before the release phase, analogous to what we discussed when we introduced ADSHR curves. This control is not common in other compressors.
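A common way to implement attack and release digitally is to smooth the gain reduction with a one-pole filter whose time constant switches depending on whether more or less reduction is needed. This is one design among several (manufacturers define their times differently); here a time of T ms means the gain reduction covers about 63% of a step in T ms. The 5 ms attack, 50 ms release and 6 dB step are hypothetical values.

```python
import math


def time_coeff(time_ms: float, sample_rate: int) -> float:
    """One-pole smoothing coefficient for a time constant given in milliseconds."""
    return math.exp(-1.0 / (time_ms * 0.001 * sample_rate))


def smooth_gain_reduction(target_gr_db: list[float], attack_ms: float, release_ms: float,
                          sample_rate: int = 48000) -> list[float]:
    """Smooth a per-sample gain-reduction curve (dB, >= 0) with separate attack and release."""
    a_att = time_coeff(attack_ms, sample_rate)
    a_rel = time_coeff(release_ms, sample_rate)
    gr = 0.0
    out = []
    for target in target_gr_db:
        # More reduction needed -> attack time; less reduction needed -> release time.
        coeff = a_att if target > gr else a_rel
        gr = coeff * gr + (1.0 - coeff) * target
        out.append(gr)
    return out


sr = 48000
target = [6.0] * 480 + [0.0] * 4800  # 10 ms needing 6 dB of reduction, then 100 ms needing none
gr = smooth_gain_reduction(target, attack_ms=5.0, release_ms=50.0, sample_rate=sr)
print(f"after 10 ms of attack: {gr[479]:.2f} dB, after 100 ms of release: {gr[-1]:.2f} dB")
```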

Finally, we have the compression ratio, which determines how much the level above the threshold is reduced. In this image we see a ratio of 3.02:1, which means that for every 3.02 dB the input rises above the threshold, the output rises only 1 dB: an excess of 3.02 dB becomes just 1.0 dB, 6.04 dB becomes 2 dB, and so on.

Some compressors include algorithms that handle the transition area around the threshold differently; this area is often called the "knee" of the compression curve. In the picture above you can see that, in this example, we are using a soft 5 dB knee, which nicely smooths the compression in this transition area.
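The threshold, ratio and knee together define the compressor's static curve. This sketch uses a widely used quadratic soft-knee formula (other compressors may shape the knee differently), with the 3.02:1 ratio and 5 dB knee from the example; the -20 dB threshold is a hypothetical value, since the text doesn't give one.

```python
def compressor_curve(x_db: float, threshold_db: float, ratio: float, knee_db: float = 0.0) -> float:
    """Static output level (dB) of a downward compressor with an optional soft knee."""
    over = x_db - threshold_db
    if knee_db > 0 and abs(over) <= knee_db / 2:
        # Inside the knee: quadratic transition from 1:1 to 1:ratio.
        return x_db + (1.0 / ratio - 1.0) * (over + knee_db / 2) ** 2 / (2 * knee_db)
    if over <= 0:
        return x_db                     # below the threshold: unchanged
    return threshold_db + over / ratio  # above the threshold: slope 1/ratio


THRESHOLD_DB, RATIO, KNEE_DB = -20.0, 3.02, 5.0  # threshold is hypothetical
for x in (-30.0, -22.5, -20.0, -17.5, -13.96, -7.92):
    y = compressor_curve(x, THRESHOLD_DB, RATIO, KNEE_DB)
    print(f"in {x:7.2f} dB -> out {y:7.2f} dB (gain reduction {x - y:5.2f} dB)")
```

With the input 6.04 dB above the threshold (-13.96 dB), the output sits 2 dB above it (-18 dB), matching the 3.02:1 ratio; within 2.5 dB on either side of the threshold the curve bends gradually instead of switching abruptly.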

Although the concept of compression seems simple, each designer implements it differently. Not all compressors react at the same speed, and some leave their own fingerprint on the sound (especially tube compressors).

There are some legendary compressors, such as the UA 1176 or the LA-2A (used on drum kits and bass guitars, for example), that clearly color the signal. The Fairchild 670 (used on electric guitar tracks, for example) also colors the sound. There are many other legendary compressors, often part of a famous channel strip or mixing console.

Optocompressors (with optical cells) are often used on vocals because of their smooth, relatively slow, program-dependent response.

In the end, there are several types of compressors, and they react very differently. Some seem to work better on certain instruments, while others are more universal.

For each track, try as many compressors as you have and choose the one that gives you the most satisfying result.

In this last picture, we can see the results of the compressor in the TT Dynamic Range meter.

We managed to raise our average RMS to around -12 dB (as we wanted).

We balanced both channels (-12.7 and -12.5, against the initial -12.5 and -10.5).

We brought the peaks down to -0.2 dB.

And we achieved a dynamic range of 11.7 and 12.1 dB (very close to our 12 dB target).

Of course, those numbers mean nothing if we don't hear and like the resulting compression, but they help us understand how we are using the compressor, whether we are overdoing or underdoing the effect, and whether we are reaching a reasonable dynamic range while removing the excessive peaks.

The Limiter

The limiter is a particular case of a compressor whose compression ratio is infinite. As we saw above, a compressor can let some instantaneous peaks escape (to preserve the punch), but in our final mix we need to make sure that no peak produces digital clipping, to avoid introducing distortion.

The limiter sets a ceiling, the maximum level that any sample can reach; any signal exceeding that ceiling is brought down to the ceiling value.

As it is a specialized compressor, it shares most of its functions (control knobs).

The input gain plays the same role as the gain control already discussed. We have also already seen the attack and release controls and the knee.

The most characteristic setting of a limiter is the output level, or ceiling. Any signal above that value is brought down to it.

Simplifying things a lot: when two or more consecutive samples sit at the same maximum level, overs will occur when we transfer the digital mix to other equipment or audio processors.

Many limiters cannot recognize such overs and react accordingly (for example, by lowering one of three consecutive peaks at the same level a little more).

Brickwall limiters often have special algorithms that analyze the input material in advance (look-ahead) to apply the right corrections and avoid such problems.
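To illustrate the look-ahead idea, here is a deliberately simplified sketch: it computes the gain each sample needs to stay under the ceiling, starts ramping the gain down up to `lookahead` samples before a peak arrives, and lets it recover slowly afterwards. Applying gain derived from future samples to the current one is equivalent to delaying the audio by the look-ahead time. It works on sample values only and does not detect inter-sample (true) peaks, which many brickwall limiters also handle by oversampling. All names and parameter values are hypothetical.

```python
def lookahead_limiter(samples: list[float], ceiling: float = 0.9, lookahead: int = 32,
                      release_step: float = 0.001) -> list[float]:
    """Simplified look-ahead limiter: no output sample exceeds `ceiling` in absolute value."""
    n = len(samples)
    # Gain each sample would need on its own to stay under the ceiling.
    needed = [1.0 if s == 0 else min(1.0, ceiling / abs(s)) for s in samples]
    out = []
    gain = 1.0
    for i in range(n):
        # Attack: look ahead and ramp linearly, so full reduction is reached exactly at a peak.
        target = 1.0
        for k in range(lookahead + 1):
            if i + k >= n:
                break
            g = needed[i + k]
            target = min(target, g + (1.0 - g) * k / (lookahead + 1))
        # Release: let the gain recover by at most `release_step` per sample.
        gain = min(target, gain + release_step)
        out.append(samples[i] * gain)
    return out


signal = [0.5] * 100 + [1.5] + [0.5] * 100  # one peak well above the ceiling
limited = lookahead_limiter(signal)
print(f"max output: {max(abs(s) for s in limited):.3f}")
print(f"16 samples before the peak: {limited[84]:.3f}, just after it: {limited[101]:.3f}")
```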

The limiter in the picture below has some other functions that we aren't going to discuss here, because most limiters lack them, with the exception of the auto-gain control, which is more common.

Decompressors or expanders

Expanders are dynamics tools that try to do the inverse of a compressor.

If the function of a compressor is to raise the average level by compressing the dynamic range, the expander tries to increase the dynamic range, lowering the average level and increasing the difference between peak RMS and average RMS.

They are often used to try to restore some dynamics to material that was previously over-compressed, just before applying a gentler compression to it.

Expanders are probably more common in mastering, for example, to decompress a recording that passed through the channel strip of a mixing console and ended up over-compressed.

Their uses can seem a bit arcane. First you need to master compressors; only then can you understand how to decompress correctly.
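A downward expander is one way to get that effect: below its threshold, each dB the input drops becomes `ratio` dB at the output, so quiet passages get quieter while peaks are untouched. This sketch uses a hypothetical -20 dB threshold and a 2:1 ratio; upward expanders, which raise the material above the threshold instead, are another variant.

```python
def downward_expander(x_db: float, threshold_db: float, ratio: float) -> float:
    """Static curve: unchanged above the threshold, slope `ratio` below it."""
    if x_db >= threshold_db:
        return x_db
    return threshold_db + (x_db - threshold_db) * ratio


THRESHOLD_DB, RATIO = -20.0, 2.0  # hypothetical settings
peak_in, quiet_in = -1.0, -30.0
peak_out = downward_expander(peak_in, THRESHOLD_DB, RATIO)
quiet_out = downward_expander(quiet_in, THRESHOLD_DB, RATIO)
print(f"difference before: {peak_in - quiet_in:.0f} dB, after: {peak_out - quiet_out:.0f} dB")
```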

Gates

If the limiter sets the maximum level (the ceiling), the gate sets the minimum (the floor).

The gate ensures that only signals above its threshold are let through (they pass the gate).

This concept seems very useful, for example, to keep an amp's noise floor from passing through, but in practice, setting up a gate perfectly is a real headache.

It shares with the compressor the threshold, the attack (how quickly the gate opens once the signal exceeds the threshold) and the release (how quickly it closes once the signal falls below the threshold). Many gates also have a hold control, which keeps the gate open for a while before the release begins.

Tweaking these controls is a delicate task, and you can even ruin the track if its softest passages are very close to the noise floor.
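The following sketch shows the basic gate logic sample by sample: the gate opens as soon as the signal exceeds the threshold, stays open for a hold period after the last sample above it (which also bridges the zero crossings of a waveform), and then fades out over the release time. The instant opening (no attack), the linear fade and all values are simplifying assumptions; real gates usually detect a smoothed envelope rather than raw samples.

```python
def noise_gate(samples: list[float], threshold: float = 0.05,
               hold_samples: int = 480, release_samples: int = 480) -> list[float]:
    """Minimal gate: opens instantly above threshold, holds, then fades out linearly."""
    out = []
    gain = 0.0
    hold = 0
    for s in samples:
        if abs(s) >= threshold:
            gain = 1.0            # open immediately (zero attack, for simplicity)
            hold = hold_samples   # restart the hold timer
        elif hold > 0:
            hold -= 1             # stay fully open during the hold period
        else:
            gain = max(0.0, gain - 1.0 / release_samples)  # close gradually
        out.append(s * gain)
    return out


noise_floor = [0.01, -0.01] * 500  # 1000 samples of low-level amp hiss
burst = [0.5, -0.5] * 500          # 1000 samples standing in for a loud note
tail = [0.01, -0.01] * 2000        # 4000 more samples of hiss
gated = noise_gate(noise_floor + burst + tail)
```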

But gates are also used for more creative things. For example, to control how much of the snare you send to the reverb: we can make only the loudest hits reach the reverb, so the quietest ones don't create unnecessary echoes. This can also help define the beat better.

Transient modifiers

They go by names such as transient modelers or transient designers, among others.

Their main goal is to alter only the transients (attack) of the material, without affecting the rest of the ADSR curve. They can reduce (without clipping) or enhance the peaks of the attack phase, decreasing or increasing the punch of the sound.

As we have seen, a compressor can let some peaks escape to preserve the punch. Transient modifiers can change both their level and their duration.

We will again find controls such as threshold, gain and ratio, but this time the processing acts only on the transients, not on the whole signal.
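One common way to build a transient designer is to compare a fast and a slow envelope follower: at the start of a note the fast one rises ahead of the slow one, and that difference drives a gain change that is only active during the attack. The sketch below follows that idea with hypothetical parameters (1 ms and 20 ms followers, +6 dB of attack boost); commercial designs differ in the details.

```python
import math


def follower(samples: list[float], time_ms: float, sample_rate: int) -> list[float]:
    """One-pole envelope follower on the rectified signal."""
    a = math.exp(-1.0 / (time_ms * 0.001 * sample_rate))
    env, out = 0.0, []
    for s in samples:
        env = a * env + (1.0 - a) * abs(s)
        out.append(env)
    return out


def transient_shaper(samples: list[float], attack_gain_db: float = 6.0, sample_rate: int = 48000,
                     fast_ms: float = 1.0, slow_ms: float = 20.0) -> list[float]:
    """Boost (positive dB) or soften (negative dB) only the attack portion of the signal."""
    fast = follower(samples, fast_ms, sample_rate)
    slow = follower(samples, slow_ms, sample_rate)
    out = []
    for s, f, sl in zip(samples, fast, slow):
        # How far the fast envelope leads the slow one: about 1 at an onset, 0 in steady state.
        amount = 0.0 if f <= 0.0 else max(0.0, (f - sl) / f)
        out.append(s * 10.0 ** (attack_gain_db * amount / 20.0))
    return out


step = [0.5] * 48000  # a note starting abruptly and then holding steady for 1 s
shaped = transient_shaper(step)
print(f"onset: {shaped[10]:.3f}, steady state: {shaped[-1]:.3f}")
```

A negative `attack_gain_db` uses the same detection to soften the attacks instead.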

De-Esser

A de-esser is an ultra-specialized compressor. Instead of working on the whole signal, it works on a narrow band of frequencies. By default, it is set to work on the range where the human voice produces sibilance.

The goal is to detect those "s" sounds and compress them, reducing their level so they sound smoother.

Most channel strips on professional studio consoles, and professional preamps dedicated to vocals, can include a de-esser module.

Although de-essers were designed to remove sibilance, their ability to compress only a specific frequency range makes them very useful for precisely targeting other unpleasant noises (fret noise on bass guitars, for example), reducing their impact without heavily affecting the rest of the material.

Other dynamics tools

They are always very specialized versions of a compressor.

There are multi-band compressors, which split the material into 3 or more frequency bands and allow independent compression for each band.

Other compressors work on the stereo image, splitting the signal into mid (the phantom center) and side signals and allowing independent compression of each.

RNDigital has even more exotic compressors.

The Dynamizer is a kind of multi-band compressor, but instead of dividing the material into frequency bands, it divides it into level bands. So we can compress the quieter and the louder parts very differently. It's a very interesting concept.

In the end, any tool that can alter the dynamics of the sound or its dynamic range is a dynamics tool.
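The mid/side split is a simple sum and difference of the two channels. This sketch assumes the common M = (L + R) / 2, S = (L - R) / 2 convention (some tools omit the division by 2) and uses halving the side signal as a crude, hypothetical stand-in for processing it separately.

```python
def ms_encode(left: list[float], right: list[float]) -> tuple[list[float], list[float]]:
    """Split a stereo pair into mid (phantom center) and side signals."""
    mid = [(l + r) / 2.0 for l, r in zip(left, right)]
    side = [(l - r) / 2.0 for l, r in zip(left, right)]
    return mid, side


def ms_decode(mid: list[float], side: list[float]) -> tuple[list[float], list[float]]:
    """Rebuild left and right from mid and side."""
    left = [m + s for m, s in zip(mid, side)]
    right = [m - s for m, s in zip(mid, side)]
    return left, right


left = [0.8, 0.2, -0.5, 0.1]
right = [0.6, 0.4, -0.5, -0.1]
mid, side = ms_encode(left, right)
# Hypothetical side-only processing: halving the side signal narrows the stereo image.
narrow_left, narrow_right = ms_decode(mid, [0.5 * s for s in side])
print("mid:", mid)
print("side:", side)
```

Material that is identical in both channels (the third sample) lives entirely in the mid signal, so side-only processing leaves it untouched.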

To be continued...

Well, I think I've covered almost everything.

The use of dynamics tools is one of the darkest areas of mixing, and I hope this entry has helped in that area.

As most dynamics tools are just specialized compressors, clearly understanding how a standard compressor works will help you understand how those specialized versions work.

I think I will end this series of entries with some tricks to improve your mixes in the next entry. Stay tuned.

In the previous blog entry we have introduced the concepts of sound dynamics and dynamic range. Now, we can start to talk about the tools that modify the dynamics of the sound, which are broadly used during mixing an masterizing tasks.

Compressor

Imagine that we did our mix (following the technique of the 3 dimensions, mentioned before) and we achieve some final volume similar to the picture below (click on the picture for full size).

We already discussed how this TT Dynamic Range meter works so, now, we should be able to understand this picture.

We can see that average RMS are around -27 dB, while peaks RMS are around -14 db, while the dynamic range is 12.5 and 10.5 dB, left and right channels, respectively.

Definitively, not a very commercial loudness. Our mix will sound very quiet, compared to any commercial mix.

We could simply move the fader control of the mix, raising the gain about 13.5 dB, to make those RMS to be placed around -13.5 dB, what will leave the mix with peaks RMS around -0.5 dB, more or less.

But, since we are talking about Peak RMS, some peaks will "overflow" that target Ceil of -0.5 dB, producing clipping. This can be solved with the use of a Limiter but, once again, we will clip the peaks, anyway (because the compression ratio of a limiter is infinite).

So, the natural solution would be to lower a bit the channel fader until we have no clipping anymore (that red light that lights from time to time), but this will lower again the average RMS of the program and, therefore, the loudness of our mix. So, we will achieve a nicely sounding mix (dynamic range enough) but, sounding clearly lower than the commercial mixes of our competitors.

The set of peaks of the attack phase of a track are being called transients. In a track or the whole mix, not all the peaks have the same height (volume). We could say that a very low percentage are the ones that "overflow" and, those are the ones that would force us to lower that fader, loosing the mix some strength.

The Compressor is a tool that "compresses" the peaks, that are over a certain loudness level, to make them suit a determined dynamic range. Even that this is its main function, it has several other functions, since it's able to alter the whole dynamic curve of the material being processed, accenting more those parts that we are interested on more.

Let see a compressor plugin, by example:

Not all compressors have same names for their controls, but all more or less (some have some more knob than others) have same basic controls.

The gain or make-up is a control that allows us to raise the overall loudness of every bit of the material before to start the compression task.

Following with the previous example:

Imagine that we would like to achieve a dynamic range of about 12 dB. We wanted to put our average RMS more or less around -12dB. Before starting to compress, we saw that the average RMS were around -27, therefore, we should raise everything 15 dB (27 - 12 = 15), so we would put that gain control on +15 dB.

If our Ceil was set at -0.5 dB, to avoid clipping and overs when translating the mix to lower resolutions (16 bits, MP3, etc), by raising the average RMS to -12 dB, we will have a dynamic range reduced to 11.5 dB and, the average peaks will "overflow".

The control named threshold determines which is the minimum loudness level that the sound must reach for that compressor to start compressing the peaks. Everything that falls below the threshold level remains uncompressed and with a higher gain (determined by the gain or makeup control) but, everything that is over the threshold will be compressed. How it will be compressed depends on the rest of controls that we are going to describe.

To determine the threshold loudness, the better is try and fail. If the threshold was very low, we will compress practically everything. If the threshold was very high, we will compress just the higher peaks.

The correct threshold is that loudness were the interesting things are happening in our mix. If we raise or lower the threshold we will notice that radically changes the result, the accent of the song. Therefore, it is of high importance to exactly determine that threshold value to give to our mix the exact accent we wanted to achieve.

The control named attack determines how long should the compressor to wait, from the exact instant that a signal crossed the threshold level until it starts to compress such a signal. If we lower the attack, the compressor works earlier, lowering every single peak. Overdone, we can lose the percussive characteristic of our mix.

With high attack settings, we will allow the most of transients to cross the threshold. We will retain the punch but, we will go back to the initial issue: some peaks will overflow.

Normally, we need short attacks to tame the peaks (by example excessive percussive sounds as a bass guitar, kick drum or snare) and, we use longer attacks to maintain the punch in tracks with weak punch.

The release control takes care of the last phase of the ADSR curve of the compression task. When we reach the threshold level, and the time established with the attack is over, the compression will take place and, this compression will take the time determined by the release control. Therefore, long releases add sustain to the material, while short releases allow to work just in the starting parts of the sound (attack phase). To give more sustain to a weak bass guitar, or to give it some density, we usually raise the release time.

The combination of attack and release controls, define how the peaks are being tamed and, they are able to give some punch or to smooth the material.

The Sonnox Dynamics compressor has one more additional control, named hold that works before the release time, in an analog way as we already discussed when we introduced the ADSHR curves. This control isn't so usual in other compressors.

Finally, we have the compression ratio, which determines how much the level above the threshold is reduced. In this image, we see a ratio of 3.02:1, which means that any excess over the threshold is divided by 3.02: an excess of 3.02 dB becomes just 1.0 dB, 6.04 dB becomes 2 dB, and so on.
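The ratio arithmetic above is the usual static (hard-knee) compression curve, where only the excess over the threshold is divided by the ratio. A minimal sketch; the function name is hypothetical and the -20 dB threshold is an assumed value, not the one in the picture:

```python
def compressed_level_db(input_db: float, threshold_db: float, ratio: float) -> float:
    """Hard-knee static compression curve: only the excess over the threshold is divided."""
    if input_db <= threshold_db:
        return input_db
    return threshold_db + (input_db - threshold_db) / ratio


# Hypothetical threshold of -20 dB with the 3.02:1 ratio from the picture.
for level in (-25.0, -20.0 + 3.02, -20.0 + 6.04):
    print(level, "->", round(compressed_level_db(level, -20.0, 3.02), 2))
```

A level below the threshold passes unchanged, while excesses of 3.02 dB and 6.04 dB come out 1 dB and 2 dB above the threshold.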

Some compressors include algorithms that handle the transition area around the threshold, often called the "knee" of the compression curve, in different ways. In the picture above, this example uses a soft 5 dB knee, which nicely smooths the compression effect in this transition area.
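For the knee, one common formulation in the compressor-design literature joins the 1:1 line and the 1:ratio line with a quadratic curve spanning the knee width, centered on the threshold. The sketch below assumes that formulation; the exact curve used by Sonnox or any other vendor may differ, and the function name is hypothetical.

```python
def soft_knee_level_db(input_db: float, threshold_db: float,
                       ratio: float, knee_db: float) -> float:
    """Static compression curve with a quadratic soft knee of width knee_db.

    Below the knee: 1:1. Above the knee: 1:ratio. Inside the knee
    (threshold +/- knee_db / 2) the two straight lines are joined smoothly.
    """
    over = input_db - threshold_db
    if knee_db > 0 and abs(over) <= knee_db / 2:
        return input_db + (1.0 / ratio - 1.0) * (over + knee_db / 2) ** 2 / (2 * knee_db)
    if over <= 0:
        return input_db
    return threshold_db + over / ratio


# Hypothetical -20 dB threshold, 3.02:1 ratio and the 5 dB knee from the picture.
for level in (-30.0, -20.0, -10.0):
    print(level, "->", round(soft_knee_level_db(level, -20.0, 3.02, 5.0), 2))
```

At the threshold itself, the soft knee already applies a little gain reduction, while well outside the knee the curve matches the hard-knee one.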

Even though the concept of compression seems unique, every designer implements it differently. Not all compressors react at the same speed, and some add their own fingerprint to the sound (especially tube compressors).

There are some legendary compressors, like the UA 1176 or the LA-2A (used on drum kits and bass guitars, for example), that clearly add color to the signal. The Fairchild 670 (used on electric guitar tracks, for example) also colors the sound. There are many other legendary compressors, usually part of a famous channel strip or mixing console.

Optocompressors (with optical cells) are often used for vocals, because of their smooth, program-dependent response.

In the end, there are several types of compressors that react very differently. Some seem to work better for certain instrument tracks, while others are more universal.

For every track, try as many compressors as you have and choose the one that gives the most satisfying result for your needs.

In this last picture, we can see the results of the compressor in the TT Dynamic Range meter.

We managed to raise our average RMS to around -12 dB (as we wanted).

We balanced both channels (-12.7 and -12.5, against the initial -12.5 and -10.5).

We lowered the peaks to -0.2 dB.

And we achieved a dynamic range of 11.7 and 12.1 dB (very close to our 12 dB target).

Of course, those numbers mean nothing if we don't hear and like the resulting compression, but they help us understand how we are using the compressor and whether we are overdoing or underdoing the effect, while achieving a reasonable dynamic range and removing the excessive peaks.

The Limiter

The limiter is a particular case of a compressor whose compression ratio is infinite. As we saw above, a compressor may let some instantaneous peaks escape (to maintain the punch), but in our final mix no peak may cause digital clipping, so that we don't introduce distortion.

The limiter sets a ceiling, the maximum level any sample can reach, and any signal exceeding that ceiling is lowered to the ceiling value.

As it is a specialized compressor, it shares most of its functions (control knobs).

The input gain is the same gain or make-up control already discussed. We already saw the attack and release controls and the knee.

The most characteristic value of a limiter is the output level, or ceiling. Any signal above that value is lowered to the ceiling.

Simplifying things a lot: when two or more consecutive peaks sit at the same level, overs can occur when we transfer the digital mix to other equipment or audio processors.

Many limiters aren't able to detect such overs and react accordingly (for example, by lowering one of three consecutive peaks at the same level a bit more).

Brickwall limiters often have special algorithms that analyze the input material in advance (look-ahead) to apply the right corrections and avoid such issues.
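The look-ahead idea can be sketched as follows, assuming plain lists of samples with 1.0 as digital full scale and a hypothetical -0.3 dBFS ceiling. Each sample gets the smallest gain needed anywhere in the next few samples, so the reduction starts before the peak arrives. This is an illustration only, not the algorithm of any particular brickwall limiter, and the function name is hypothetical.

```python
from typing import List


def lookahead_limit(samples: List[float], ceiling_db: float = -0.3,
                    lookahead: int = 32) -> List[float]:
    """Brickwall limiter sketch: no output sample exceeds the ceiling.

    needed[i] is the gain sample i would need on its own. Taking the minimum
    over the next `lookahead` samples starts the gain reduction before the
    peak arrives, instead of reacting after it.
    """
    ceiling = 10.0 ** (ceiling_db / 20.0)  # dBFS -> linear amplitude
    needed = [min(1.0, ceiling / abs(s)) if s != 0.0 else 1.0 for s in samples]
    out = []
    for i, s in enumerate(samples):
        gain = min(needed[i:i + lookahead + 1])
        out.append(s * gain)
    return out


print(lookahead_limit([0.1, 0.5, 1.2, -1.5, 0.3], lookahead=2))
```

A real limiter would also smooth the gain changes (release) and often oversamples to catch peaks that fall between samples; both are left out to keep the sketch short.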

The limiter in the picture below has some other functions that we aren't going to discuss here, because they are uncommon in most limiters, with the exception of the auto-gain control, which is found more often.

Decompressors or expanders

Expanders are dynamics tools that try to do the inverse of a compressor.

If the function of a compressor is to raise the average loudness by compressing the dynamic range, the expander tries to increase the dynamic range, lowering the average loudness and increasing the difference between Peak RMS and average RMS.
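A downward expander, assumed here (upward expanders, which raise the material above the threshold, also exist), mirrors the hard-knee compression curve: below the threshold, the distance to the threshold is multiplied by the ratio instead of divided. The function name, threshold and levels in the example are hypothetical:

```python
def expanded_level_db(input_db: float, threshold_db: float, ratio: float) -> float:
    """Downward expander: below the threshold, the distance to it is multiplied."""
    if input_db >= threshold_db:
        return input_db
    return threshold_db + (input_db - threshold_db) * ratio


# A peak at -1 dB and a quiet passage at -50 dB, threshold -40 dB, ratio 2:1.
peak = expanded_level_db(-1.0, -40.0, 2.0)
quiet = expanded_level_db(-50.0, -40.0, 2.0)
print(peak, quiet, "difference:", peak - quiet)
```

The peak is untouched while the quiet passage drops 10 dB, so the peak-to-quiet difference grows from 49 dB to 59 dB.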

They are often used to try to restore some dynamics to material that was previously over-compressed, usually just before applying a gentler compression to it.

Expanders are probably more common during mastering, for example, to decompress a recording that passed through the channel strip of a mixing console and ended up over-compressed.

Their uses can seem a tad arcane. First, you should master compressors; only then will you understand how to decompress correctly.

Gates

If the limiter sets the maximum ceiling for loudness, the gate sets the minimum floor.

The gate ensures that only signals louder than its threshold are accepted (they pass through the gate).

This concept seems very useful to, for example, stop the amp's noise floor from passing through the gate, but in practice, controlling a gate perfectly is a real headache.

It shares with a compressor the threshold, the attack (the time the signal must stay above the threshold to open the gate) and the release (the time the gate stays open after the sound falls below the threshold).
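The gate logic, using the attack and release definitions given above, can be sketched like this. Times are counted in samples and the level is the raw sample magnitude, both simplifying assumptions; the function and parameter names are hypothetical.

```python
from typing import List


def gate(samples: List[float], threshold: float,
         open_after: int, close_after: int) -> List[float]:
    """Noise-gate sketch following the definitions in the text.

    open_after  ("attack"):  samples the level must stay above the threshold
                             before the gate opens.
    close_after ("release"): samples the gate stays open after the level
                             falls below the threshold.
    A real gate compares a smoothed envelope, not raw samples, and fades
    instead of switching abruptly.
    """
    out = []
    is_open = False
    above = below = 0
    for s in samples:
        if abs(s) >= threshold:
            above, below = above + 1, 0
            if not is_open and above >= open_after:
                is_open = True
        else:
            above, below = 0, below + 1
            if is_open and below > close_after:
                is_open = False
        out.append(s if is_open else 0.0)
    return out


print(gate([0.01, 0.5, 0.5, 0.5, 0.02, 0.02, 0.02, 0.02],
           threshold=0.1, open_after=2, close_after=2))
```

The first 0.5 sample is muted because the level hasn't yet been above the threshold for two samples; after the level drops, the gate stays open for two more samples and then closes.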

Tweaking these controls is a delicate task, and you can even ruin the track if the softer passages are very close to the noise floor.

But gates are also used for more creative things. For example, to control how much of the snare "pap" you send to the reverb. We can make sure only the loudest hits go to the reverb, preventing the quietest parts from creating unnecessary echoes. This also helps define the beat better.

Transient modifiers

They go by nice names such as transient modelers or transient designers, among others.

Their main goal is to alter only the transients (attack) of the material, without affecting the rest of the ADSR curve. They can reduce (without clipping) or enhance the peaks of the attack phase, decreasing or increasing the punch of the sound.
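One common way to build such a tool, assumed here, compares a fast and a slow envelope follower: during an attack the fast one rises well ahead of the slow one, and that difference drives the extra gain (or attenuation). The time constants and names are hypothetical; commercial transient designers use their own detectors.

```python
import math
from typing import List


def envelope(samples: List[float], time_ms: float, sample_rate: float) -> List[float]:
    """Rectify and smooth with a one-pole filter of the given time constant."""
    coeff = math.exp(-1.0 / (sample_rate * time_ms / 1000.0))
    env, out = 0.0, []
    for s in samples:
        env = coeff * env + (1.0 - coeff) * abs(s)
        out.append(env)
    return out


def shape_transients(samples: List[float], attack_gain: float,
                     sample_rate: float = 48000.0,
                     fast_ms: float = 1.0, slow_ms: float = 20.0) -> List[float]:
    """attack_gain > 1 emphasizes attacks, attack_gain < 1 softens them.

    The fast envelope rises ahead of the slow one only during attacks,
    so their relative difference (0..1) says how "transient" each sample is.
    """
    fast = envelope(samples, fast_ms, sample_rate)
    slow = envelope(samples, slow_ms, sample_rate)
    out = []
    for s, f, sl in zip(samples, fast, slow):
        weight = (f - sl) / f if f > sl else 0.0
        out.append(s * (1.0 + (attack_gain - 1.0) * weight))
    return out


hit = [0.0] * 100 + [1.0] * 4800  # silence, then a sudden, sustained note
louder = shape_transients(hit, attack_gain=2.0)
softer = shape_transients(hit, attack_gain=0.5)
print(round(louder[100], 2), round(softer[100], 2), round(louder[-1], 2))
```

The onset is nearly doubled or halved, while the sustained part ends up close to its original level, which is the "transients only" behavior described above.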

As we have seen, a compressor can let some peaks escape to maintain the punch. Transient modifiers can change their volume and duration.

We will again see controls such as the threshold, gain and ratio, but this time the effect is aimed only at the transients, not at the whole signal.

De-Esser

A de-esser is an ultra-specialized compressor. Instead of working on the whole material, it works on a very narrow band of frequencies. By default, it is set to work on the frequency range where the human voice produces sibilant sounds.

The goal is to detect those "s" sounds and compress them, reducing their loudness so they sound smoother.

Most of the channel strips in professional studio mixing consoles, or in professional preamps dedicated to vocals, may include a de-esser module.

Even though they were designed to get rid of sibilants, their ability to compress only a certain range of frequencies makes them very useful for acting precisely on certain ugly noises (fret noise on bass guitars, for example), reducing their impact without heavily affecting the rest of the material.
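The de-esser idea can be sketched as a split-band compressor: a simple crossover separates the upper band, only that band is compressed when its level exceeds the threshold, and the bands are summed back. The one-pole crossover, the 5 kHz split, the linear-amplitude threshold and all names are assumptions for illustration; real de-essers typically use steeper filters. Changing `split_hz` would point the same sketch at other noises.

```python
import math
from typing import List


def one_pole_lowpass(samples: List[float], cutoff_hz: float,
                     sample_rate: float) -> List[float]:
    a = math.exp(-2.0 * math.pi * cutoff_hz / sample_rate)
    y, out = 0.0, []
    for s in samples:
        y = (1.0 - a) * s + a * y
        out.append(y)
    return out


def de_ess(samples: List[float], sample_rate: float = 48000.0,
           split_hz: float = 5000.0, threshold: float = 0.1,
           ratio: float = 4.0, smooth_ms: float = 2.0) -> List[float]:
    """Split-band de-esser sketch: only the band above split_hz is compressed."""
    low = one_pole_lowpass(samples, split_hz, sample_rate)
    high = [s - lo for s, lo in zip(samples, low)]  # complementary upper band
    c = math.exp(-1.0 / (sample_rate * smooth_ms / 1000.0))
    env, out = 0.0, []
    for lo, hi in zip(low, high):
        env = c * env + (1.0 - c) * abs(hi)  # level of the upper band only
        gain = 1.0
        if env > threshold:
            # Linear-amplitude ratio applied to the excess over the threshold.
            gain = (threshold + (env - threshold) / ratio) / env
        out.append(lo + hi * gain)
    return out
```

A low tone passes through untouched because its upper band never reaches the threshold, while a loud high-frequency tone is turned down.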

Other dynamics tools

They are always very specialized versions of a compressor.

There are multi-band compressors, which split the material into 3 or more frequency ranges, allowing individual compression control for each range.

Other compressors work on the stereo image, splitting the signal into the phantom center (mid) and side signals and allowing individual compression of each part.
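The mid/side approach can be sketched as an encode, process, decode round trip: mid is the average of the two channels (the phantom center) and side is half their difference. The function names are hypothetical, and the per-sample compressor is a stand-in with no attack or release; any dynamics processor could be plugged in instead.

```python
from typing import Callable, List, Tuple

Signal = List[float]


def process_mid_side(left: Signal, right: Signal,
                     process_mid: Callable[[Signal], Signal],
                     process_side: Callable[[Signal], Signal]) -> Tuple[Signal, Signal]:
    """Encode L/R to mid/side, process each part separately, decode back."""
    mid = [(lv + rv) / 2.0 for lv, rv in zip(left, right)]   # phantom center
    side = [(lv - rv) / 2.0 for lv, rv in zip(left, right)]  # stereo difference
    mid, side = process_mid(mid), process_side(side)
    new_left = [m + s for m, s in zip(mid, side)]
    new_right = [m - s for m, s in zip(mid, side)]
    return new_left, new_right


def simple_compressor(threshold: float, ratio: float) -> Callable[[Signal], Signal]:
    """Hypothetical per-sample hard-knee compressor (no attack or release)."""
    def run(signal: Signal) -> Signal:
        out = []
        for x in signal:
            mag = abs(x)
            if mag > threshold:
                mag = threshold + (mag - threshold) / ratio
            out.append(mag if x >= 0 else -mag)
        return out
    return run


# Compress only the side signal; the center is left untouched.
left, right = process_mid_side([0.5, 0.9, -0.2], [0.5, 0.1, -0.4],
                               process_mid=lambda sig: sig,
                               process_side=simple_compressor(0.2, 2.0))
print(left, right)
```

Only the second sample, where the channels differ most, is pulled towards the center; the others keep their original values.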

RNDigital has even more exotic compressors.

The Dynamizer is a kind of multi-band compressor, but instead of dividing the material into frequency bands, it divides it into several loudness bands. So we can compress the quieter and the louder parts very differently. It's a very interesting concept.

In the end, any tool able to alter the sound dynamics or its dynamic range is a dynamics tool.

To be continued...

Well, I think I've covered almost everything.

One of the darkest areas of mixing is the use of dynamics tools, and I hope this has been helpful.

As most dynamics tools are just specialized compressors, clearly understanding how a regular compressor works will help you understand how those specialized versions work.

I think I will end this series of entries with some tricks to enhance your mixes in the next entry. Stay tuned.

19 March 2013

Pedals: Dry Bell's Vibe Machine - First contact

Introduction

Yes, I love vibe pedals, but I hate every issue that this kind of pedal brings with it.

First, the size. A proper Univibe pedal, with optical LEDs and all the candy, is big and takes a lot of room on your pedalboard.

Second, the tone sucking. Since vibe pedals were designed more for keyboards than guitars, their input and output impedances were not well suited to electric guitars.

This is my fifth vibe pedal, and I've owned very good ones. Previously, I had a Voodoo Lab Vibe, a Roger Mayer Voodoo Vibe+, a Fulltone MDV2 and a Lovepedal Picklevibe.

The Voodoo Lab has a very close-to-vintage sound (only the chorus side of the Univibe), but it tends to get lost in the mix.

The Roger Mayer is a sophisticated vibe, with a more studio-like sound and a lot of tweakability, but it's big as hell and has more controls than a plane.

The Fulltone MDV2 includes both the vintage and modern sounds, but to me it has the same impedance issues as most Fulltone pedals. Also, the speed (or depth) depends on the rocker position and, since you switch it on or off by pressing the rocker, you lose your setting every time you switch it on or off.

The Lovepedal Picklevibe isn't based on the real Univibe effect, although it gets close, and it's very easy to use, since it has a single knob. But that is also a limitation, since you cannot easily modify the depth of the effect. Anyway, it takes the least room on your pedalboard of any vibe while giving you a usable sound.

I wasn't expecting to buy a new pedal for a long, long time, once my pedalboard had been Wamplerized, but when I saw Brett Kingman's demo (as interesting as always), I fell in love with this pedal.

It had everything I dreamed of in a vibe pedal.

So, here we are. I've had the vibe for a while, but the instability of my mains power made me wait until I had stable enough power to perform my tests. Otherwise, neither the amp nor the pedals sound reasonably good.

This is the only pedal made by Dry Bell, in Croatia, and, in my opinion, it is the best vibe pedal available nowadays, but its price tag will keep a lot of people from enjoying it. It's a pity.

Presentation

The pedal comes inside a white cardboard box, which contains the actual pedal box.

The pedal comes wrapped in bubble wrap, and the user's manual is just a single sheet with all the information you need to run the pedal.

Together with the pedal, they give you a pick holder and a handwritten note thanking you for buying their pedal.

I would say this is one of the most professional pedals I've ever seen. It has everything you can imagine a vibe should have, and everything is packed into the size of a Boss pedal (even smaller than my Wampler pedals).

When you open the pedal case, all the room is filled by the circuit board, so the drawback is that there is no room for a battery. You need to run this pedal from an AC adaptor, and it works with anything between 9 V and 16 V.

This pedal draws a lot of current (85 mA maximum), so be sure to feed it from a suitable output of your power brick. I had to balance all my pedals between two Voodoo Lab Pedal Power 2 units, and the Vibe runs on output 5 (outputs 5 and 6 can deliver a maximum of 250 mA, while outputs 1 to 4 and 7 to 8 deliver a maximum of 100 mA).

Testing it

The pedal has two internal jumpers.

The first one controls an output buffer, which comes activated from the factory.

The second one enables control from an external expression pedal, which comes deactivated from the factory.

I first deactivated the output buffer, just to check how it worked. The results weren't very satisfying, since the pedal became a real tone sucker. With both the output and input buffers off, this pedal mimics the impedance issues of any vintage vibe and, depending on the rest of your pedalboard, it can be a real mess.

I didn't like it when stacked with my overdrives, distortion or fuzz.

So I opened the unit again and set the jumper back to the default (output buffer on).

This doesn't seem to alter the sound of the pedal itself, and it helps the rest of the pedal chain a lot.

This pedal has a switch (on its front) with two modes: original and bright.

The original mode has an impedance level similar to the original Univibe, while the bright mode switches on an input buffer.

As I was using the Wampler Decibel+ buffer/booster, the effect of the bright mode is very subtle, but I guess that for people who don't have a buffer on their pedalboard, this mode (together with the output buffer) will be of great help to place the vibe in the pedal chain without negatively impacting the rest of the pedals.

The pedal offers the two modes of the Univibe: Chorus and Vibrato.

I've only checked the Chorus mode, which corresponds to the legendary Univibe sound. I will probably check the vibrato mode some other day; I wasn't very interested in that mode.

The Voodoo Lab vibe implemented only the chorus mode, as did the Picklevibe.

The Roger Mayer Voodoo Vibe+ has both (even more than two!), as does the Fulltone unit.

Anyway, with the Decibel+ buffer running at the beginning of the chain and the output buffer of the Vibe Machine on, the sound was absolutely awesome.

This unit has some trim pots on its sides that can be tweaked to dial in the exact volume and symmetry of the wave, among other settings for an external expression pedal.

I didn't feel the need to tweak those trim pots, since the sound I was getting with the factory settings was good enough.

This symmetry control is a feature I had already seen on Roger Mayer's Voodoo Vibe+.

For better results, the booster section of the Decibel+ should be set low. Not because of the vibe itself, but because when it stacks into gain pedals, the sound can become a bit muddy and undefined.

If you run a booster at the beginning, be sure to tweak it while the vibe is stacked into a hard-clipping pedal (such as a fuzz).

Anyway, any potential impedance issue can be solved with the output and input buffers while keeping a good vibe sound, and that's simply awesome. Good job!

The Video

I've recorded a video of my first contact with this pedal.

I know the pedal never sounds on its own in the video. At least the buffer/booster (Decibel+) and the delay (Tape Echo) are always on.

But a pedal that doesn't integrate into my pedalboard doesn't interest me at all.

I like to test pedals in their real environment, not just alone, where they can sound awesome but then cause impedance issues when stacked with the rest of the pedalboard.

Just take this into account.

My impressions

This is one of the best vibe clones, in the same ballpark as the Roger Mayer Voodoo Vibe+ or the Fulltone MDV2, but the only one the size of a Boss pedal. Only the Lovepedal Picklevibe is smaller, but it's very limited.

It has everything you would find by combining all vibe pedals, including switchable input and output buffers, an input for an expression pedal, an input for an external control pedal, and trim pots for effect volume and symmetry. Plus the two original Univibe modes: chorus and vibrato.

The switchable output and input buffers let this pedal be stacked on your pedalboard with ease. The output buffer (on by default) doesn't really affect the original vibe sound and prevents tone sucking in the rest of the pedals. The input buffer (bright mode) affects the original sound more and has practically no effect if you already run a buffer or buffered pedal before the Vibe.

Without any doubt, this is the most interesting pedal I've seen in a long time; it's really pedalboard friendly.

On the negative side, you cannot run this pedal on batteries, since there is no physical room inside the box for one, and it draws a lot of current (85 mA maximum). So be sure to check the maximum current your power brick can deliver and balance the pedals across its outputs, or just run a dedicated AC adaptor for this unit.

But honestly, I prefer to sacrifice the battery and have more room on my pedalboard, so to me this is not a real issue.

The real issue with this pedal is its price tag, which is really high. Since it's produced in Croatia (outside the European Community), you have to pay extra customs fees along with the shipping costs, which puts the final price of this pedal in the stratosphere.

Dear guys at Dry Bell, you've made an impressive pedal, just what the doctor ordered for any vibe lover, but with that price, you are keeping your pedal from spreading to practically every pedalboard.

Can you please reconsider your price tag?

If you do, I bet your pedal will rule the vibe world!

Home Studio: Mixing - Part 4

Introduction

We have already reviewed the typical tasks of the mixing stage and discussed the 3 dimensions of mixing.

Some techniques and tools are designed to modify the sound dynamics, and they are widely used during both the mixing and the mastering phases (but in different ways).

Before talking about compressors, decompressors and limiters, we should first understand what the term "sound dynamics" means and, most importantly, what "dynamic range" means.

Sound dynamics

Even if two different instruments (for example, a guitar and a trumpet) play the same note, there is a clear difference in the sound each one produces. The fingerprint of each instrument is totally different.

Apart from the fundamental note, each instrument generates other, secondary frequencies (harmonics, overtones...), whose content and intensity vary greatly from instrument to instrument.

This characteristic fingerprint of each instrument is what is called timbre.

Apart from this relationship between the fundamental note, harmonics and overtones, we can analyze how the sound wave evolves over time. The acronym ADSR (Attack, Decay, Sustain and Release) is probably familiar to you. More recently, we can even talk about ADSHR, if we include the Hold phase between Sustain and Release.

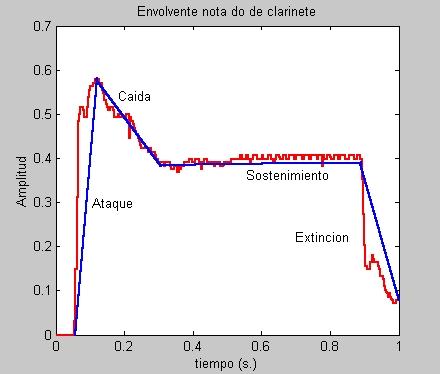

The next picture shows the different phases of a C note played by a clarinet.

Ataque = Attack

Caída = Decay

Sostenimiento = Sustain

Extinción = Release

The axes are: Volume (Amplitud) and Time (Tiempo).

The particular relationship between volume and time is called the volume envelope.

Apart from the timbre, the way the same note varies over time also differs greatly from instrument to instrument. Even the same instrument, depending on the playing intention, can produce different curves for the same note (for example, whether we play the guitar with fingers or a pick, harder or softer). The timbre is the same, but the ADSR curve is different.

The Attack phase is the ramp from the beginning of the sound until it reaches its highest volume. It is a very short time, during which the volume goes from zero to maximum.

Once the maximum volume has been reached, the volume drops (Decay) until it reaches a certain level, which is maintained for a while (Sustain). When we stop acting on the instrument, the sound starts to fade out (Release). More recently, a new phase (Hold) was introduced between Sustain and Release, because the sound drops quickly after the sustain but is held at the same low volume for a while before finally fading out (Release).
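To make the envelope concrete, here is a linear ADSR envelope as a function of time, with the Hold phase left out. The straight-line segments, the function name and the parameter values are assumptions for illustration; real instruments, like the clarinet in the picture, produce curved segments.

```python
def adsr_level(t: float, attack: float, decay: float, sustain: float,
               note_off: float, release: float) -> float:
    """Linear ADSR envelope (0..1) at time t, all times in seconds.

    Assumes the note is held at least until the decay has finished
    (note_off >= attack + decay).
    """
    if t < 0:
        return 0.0
    if t < attack:                      # ramp from silence to the maximum
        return t / attack
    if t < attack + decay:              # fall from the maximum to the sustain level
        return 1.0 - (1.0 - sustain) * (t - attack) / decay
    if t < note_off:                    # held while we keep playing
        return sustain
    if t < note_off + release:          # fade out after we stop playing
        return sustain * (1.0 - (t - note_off) / release)
    return 0.0


# 10 ms attack, 100 ms decay, sustain at 60 %, note released at 1 s, 0.5 s release.
for t in (0.0, 0.005, 0.01, 0.06, 0.5, 1.25, 2.0):
    print(f"t={t:.3f}s level={adsr_level(t, 0.01, 0.1, 0.6, 1.0, 0.5):.2f}")
```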

The time each note played by each instrument spends in each of the ADSHR phases is what is known as the sound dynamics (the way it moves).

Compressors, decompressors, limiters, gates and similar dynamics tools all affect one or more parameters of that envelope, reshaping the final 'look' of the curve. The timbre remains the same, but the sound dynamics are modified.

Dynamic Range

This is a very important concept as soon as we start to alter the sound dynamics during our musical production.

The maximum volume of a track is known as its peak volume. During playback of a track, there are certain instants where the volume reaches peaks way above the average volume level of the track.

Usually, the Attack phase of each note is responsible for those peaks, while the average volume level is associated with the Sustain phase.

The loudness of the track is the perceived volume. Don't confuse loudness with volume. Volume is a measurable value (for example, decibels of sound pressure), while perceived volume (or loudness) is a subjective value that depends on the listener and isn't directly measurable.

Contrary to what you might think, what really determines loudness is the average volume of the track, not the peak volume.

The average volume is measured with the RMS (Root Mean Square) method, a mathematical formula that calculates the average level of an audio signal: the square root of the mean of the squared sample values.

If, for a certain fragment (which can be the whole track or song), the maximum peak level is -6 dB and the RMS over the same fragment is -18 dB, we say that we have a dynamic range of 12 dB (-6 - (-18) = 12 dB).

So, the dynamic range is the margin, in decibels, between the RMS and the peak value of a certain sound fragment.
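The definitions above translate directly into code. A minimal sketch, assuming samples are floats where 1.0 is digital full scale (0 dBFS); the function names are hypothetical. The TT Dynamic Range Meter may compute its DR value with a different, windowed method, so its readings need not match this simple difference. A full-scale 1 kHz sine serves as a check, since its RMS is about 3 dB below its peak.

```python
import math
from typing import List


def to_db(amplitude: float) -> float:
    """Linear amplitude (1.0 = digital full scale) to dBFS."""
    return 20.0 * math.log10(amplitude)


def peak_db(samples: List[float]) -> float:
    return to_db(max(abs(s) for s in samples))


def rms_db(samples: List[float]) -> float:
    """Root Mean Square: square every sample, average, take the square root."""
    return to_db(math.sqrt(sum(s * s for s in samples) / len(samples)))


def dynamic_range_db(samples: List[float]) -> float:
    """Peak-to-RMS margin in dB, as defined in the text."""
    return peak_db(samples) - rms_db(samples)


sr = 48000
sine = [math.sin(2 * math.pi * 1000 * n / sr) for n in range(sr)]  # one second, 1 kHz
print(round(peak_db(sine), 2), round(rms_db(sine), 2), round(dynamic_range_db(sine), 2))
```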

To check the dynamic range of any part, track or complete mix, it's very useful to have a meter. I personally use the TT Dynamic Range Meter (see picture below).

If we look at the meter in the picture above, each channel is measured separately (side bars in the meter). The narrower bar corresponds to the average values of the peaks (Peak RMS), and a horizontal red line marks the maximum peak; this value is shown in the Peak boxes at the top (-0.5 Left / -0.9 Right).

The wider bar, next to the narrower one, represents the average RMS, and its current value is shown at the bottom, in the RMS boxes (-12.0 Left / -12.3 Right).

The two center bars show the current dynamic range of each channel (the difference between Peak RMS and average RMS).

OK, OK... what a nice meter, but... what is all this for?

Be patient, here we go.

The people currently leading the mastering field claim that there is a loudness war in music production, to achieve mixes with the maximum loudness. The more we raise the RMS, the smaller the difference between the average volume and the peak volume. The sound becomes compressed and loses expression and accents, forming a wall of sound.

So what is an acceptable dynamic range? It all depends on the destination of the production.

Without compression, a philharmonic orchestra produces a dynamic range of between 20 and 24 dB.

For classical music and cinema, Katz recommends a dynamic range of at least 20 dB.

For pop and radio, around 14 dB. Radio broadcasters process all material, maximizing it to even out the volume of everything they air, and this value ensures a good result after their processing.

For harder musical styles that still keep expressiveness and dynamics, around 12 dB.
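The targets above can be written as a small lookup table. In this Python sketch, the destination keys and the ±1 dB tolerance around the "around X dB" targets are illustrative assumptions. The 20 dB figure for classical music and cinema is treated as a minimum, as the text says "at least".

```python
# Dynamic-range targets quoted in the text, per destination.
# Keys and the 1 dB tolerance are illustrative assumptions.
TARGETS_DB = {
    "classical/cinema": (20.0, True),   # (target, is_minimum): "at least 20 dB"
    "pop/radio": (14.0, False),         # "around 14 dB"
    "harder styles": (12.0, False),     # "around 12 dB"
}


def compare_to_target(measured_db: float, destination: str,
                      tolerance_db: float = 1.0) -> str:
    """Say whether a measured dynamic range is below, on or above the target."""
    target, is_minimum = TARGETS_DB[destination]
    if is_minimum:
        return "on target" if measured_db >= target else "below target"
    if measured_db < target - tolerance_db:
        return "below target"  # more compressed than recommended
    if measured_db > target + tolerance_db:
        return "above target"  # more dynamic than recommended
    return "on target"


if __name__ == "__main__":
    # 11.5 dB is the left-channel reading from the meter described earlier.
    for dest in TARGETS_DB:
        print(dest, "->", compare_to_target(11.5, dest))
```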

For example, recordings by Led Zeppelin, Jimi Hendrix and the other rock icons of that era have dynamic ranges between 10 and 11 dB (some songs go as low as 8 dB, others as high as 12 dB). I measured these myself with the TT Dynamic Range tool.

Katz, among many others, considers that era to be when the best recordings were made: loudness reached the right level while the quality and nuances of the sound were preserved.

Of course, the ear is the final judge. Still, I always aim for a dynamic range between 8 and 12 dB for hard rock, and I always keep an eye on the meter while working with compressors to see clearly whether I'm overdoing the effect.

Alright, alright... but what does this mean in practice?

When mixing, and especially when mastering, we set a ceiling close to 0 dB (for example, -0.5 dB) as the maximum peak value our mix can reach. Note that values above -0.3 dB can cause clipping and overs during later digital processing in other equipment.

If our maximum peak (the limiter ceiling) is -0.5 dB and we want to keep a dynamic range of 11 dB, our RMS should be around -11.5 dB (-0.5 dB - 11 dB). This is the maximum loudness we can offer.
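Rearranging the dynamic-range definition gives the RMS level to aim for once the ceiling is fixed. Here is a tiny Python sketch; the helper name is hypothetical. The 14 dB and 20 dB rows simply apply the same arithmetic to the other targets mentioned earlier.

```python
def target_rms_db(ceiling_db: float, dynamic_range_db: float) -> float:
    """RMS level that leaves `dynamic_range_db` of margin below the peak ceiling."""
    return ceiling_db - dynamic_range_db


if __name__ == "__main__":
    ceiling = -0.5  # limiter ceiling from the example above
    for label, dr in [("rock, 11 dB", 11.0), ("pop/radio, 14 dB", 14.0),
                      ("cinema, 20 dB", 20.0)]:
        print(f"{label}: RMS around {target_rms_db(ceiling, dr):.1f} dB")
```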

We will see why all this matters when we describe how audio compressors and limiters work.

To make this easy to control, Bob Katz designed the meters of his K-System (K-20, K-14 and K-12).

Depending on the destination of the mix (as mentioned above), your target dynamic range is 20 dB (cinema), 14 dB (radio, pop) or 12 dB (harder music), and you choose the corresponding meter.
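The number in each meter's name is the headroom between the meter's 0 mark and digital full scale. On a K-14 meter, for example, 0 corresponds to -14 dBFS. The Python sketch below encodes only the choice described above and the meter naming, not a full meter implementation. The dictionary keys are illustrative assumptions.

```python
# Destination -> K-System meter, following the pairing described above.
# The destination labels are illustrative assumptions.
K_METER_FOR = {
    "cinema": "K-20",
    "classical": "K-20",
    "pop": "K-14",
    "radio": "K-14",
    "harder music": "K-12",
}


def zero_mark_dbfs(meter: str) -> float:
    """Level in dBFS that sits at the 0 mark of a K-System meter ('K-14' -> -14.0)."""
    prefix, _, headroom = meter.partition("-")
    if prefix != "K" or not headroom.isdigit():
        raise ValueError(f"not a K-System meter name: {meter!r}")
    return -float(headroom)


if __name__ == "__main__":
    for destination, meter in K_METER_FOR.items():
        print(f"{destination}: {meter}, 0 mark at {zero_mark_dbfs(meter):.0f} dBFS")
```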

Whenever your RMS stays around the 0 mark and your RMS peaks aren't constantly in the red zone of the meter, you will get a powerful mix with a good dynamic range.
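The colour zones amount to a reading plus a threshold check. This Python sketch assumes the K-System zone boundaries: green up to the 0 mark, yellow up to the +4 dB "fortissimo" limit, red above. It also assumes the RMS value passed in already follows the meter's own calibration. Katz's K-System calibrates RMS so that a sine wave reads the same as its peak, about 3 dB above the plain mathematical RMS, and this sketch does not apply that correction. The function names are hypothetical.

```python
def k_reading(rms_dbfs: float, headroom_db: int) -> float:
    """Reading on a K-<headroom> meter for an RMS level given in dBFS."""
    return rms_dbfs + headroom_db


def k_zone(reading: float) -> str:
    """Colour zone: green up to 0, yellow up to +4 (fortissimo), red above."""
    if reading <= 0.0:
        return "green"
    if reading <= 4.0:
        return "yellow"
    return "red"


if __name__ == "__main__":
    rms = -12.0  # example RMS level in dBFS
    for k in (20, 14, 12):
        r = k_reading(rms, k)
        print(f"K-{k}: {r:+.1f} -> {k_zone(r)}")
```

Feeding the same -12 dBFS RMS into the three meters shows why one mix reads so differently on each scale: well into the red on K-20, yellow on K-14, and at the 0 mark on K-12.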

For this purpose (and many others), I personally use IXL Inspector by RNDigital, which implements the three K-System meters (among others).

The picture above shows a track measured with a K-20 meter. You can see that the RMS is in the red zone and stays there constantly. If this were a classical piece, the track would be far too compressed.

Let's look at the same track through a K-14 meter.

Now the music isn't in the red zone, but it stays constantly in the yellow zone.

This track would be adequate for pop, but it could sound excessively compressed if we sent it to a radio broadcaster.

Let's look at the same track with a K-12 meter.

Even though the RMS is still in the yellow zone, it stays well below the +4 dB limit (fortissimo) and reaches that zone only sporadically. The red zone is reached only briefly, at a few specific moments in the song. This dynamic range is perfectly acceptable for a rock mix.

If I raise the compressor gain on the mix bus (exaggeratedly), we see that the RMS stays permanently in the red zone.

This mix will sound really loud (because the RMS is very close to the peak values), but it will sound very compressed and probably lifeless. This effect is less noticeable with Sonnox plugins and more evident with less sophisticated ones.

To be continued ...

Before discussing dynamics tools such as compressors, limiters, decompressors and gates, I considered it necessary to introduce the concepts of sound dynamics and dynamic range, because those are exactly what these tools modify.

Once these concepts are clear, we will see in an upcoming post how those tools affect sound dynamics and dynamic range.

We have already reviewed the typical tasks of the mixing stage and discussed the three dimensions of mixing.

Some widely used techniques and tools are designed to modify sound dynamics, both during mixing and during mastering (but in different ways).

Before talking about compressors, decompressors and limiters, we should first understand what the term "sound dynamics" means and, most importantly, what "dynamic range" means.

Sound dynamics

Even when two different instruments (for example, a guitar and a trumpet) play the same note, there is a clear difference between the sounds they produce. Each instrument's fingerprint is completely different.

Apart from the fundamental, each instrument generates other, secondary frequencies (harmonics, overtones...), whose content and intensity vary greatly from one instrument to another.

This characteristic fingerprint of each instrument is called timbre.

Besides this relationship between the fundamental, harmonics and overtones, we can analyze how the sound wave evolves over time. The acronym ADSR (Attack, Decay, Sustain and Release) is probably familiar to you. More recently, people also talk about ADSHR, which adds a Hold phase between Sustain and Release.

The next picture shows the different phases of a C note played by a clarinet. Its labels are in Spanish: Ataque = Attack, Caída = Decay, Sostenimiento = Sustain, Extinción = Release. The axes are Amplitud (volume) and Tiempo (time).

This particular relationship between volume and time is called the volume envelope.

Besides timbre, the way the same note evolves over time also differs greatly from instrument to instrument. Even the same instrument can produce different curves for the same note depending on the performer's intention (for example, playing the guitar with the fingers or with a pick, or playing harder or softer). The timbre stays the same, but the ADSR curve changes.

The Attack phase is the ramp from the start of the sound until it reaches its maximum volume. It is very short: in that brief time the volume goes from zero to maximum.

Once the maximum volume is reached, the volume drops (Decay) to a certain level, which is held for a while (Sustain). When we stop playing the instrument, the sound starts to fade out (Release). More recently, a Hold phase has been added between Sustain and Release. After the sustain the sound drops quickly, but it stays at the same low level for a while before finally fading out (Release).
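The phases above can be sketched as a piecewise-linear envelope. This Python example follows the phase order described in this post, with Hold after Sustain modelled as an instant drop to a lower level. The durations, the levels and the function name are illustrative assumptions, and real instruments produce curved rather than straight segments. Many synthesizers use a different layout (AHDSR, with Hold right after Attack).

```python
from typing import List


def adshr_envelope(attack: int, decay: int, sustain: int, hold: int, release: int,
                   sustain_level: float = 0.6, hold_level: float = 0.3) -> List[float]:
    """Return one amplitude value (0.0 to 1.0) per time step.

    Durations are counted in time steps; the default levels are illustrative.
    """
    if min(attack, decay, release) < 1 or min(sustain, hold) < 0:
        raise ValueError("attack/decay/release need >= 1 step, sustain/hold >= 0")
    env: List[float] = []
    # Attack: ramp from silence up to the maximum volume (1.0).
    env += [(i + 1) / attack for i in range(attack)]
    # Decay: fall from the maximum down to the sustain level.
    env += [1.0 - (1.0 - sustain_level) * (i + 1) / decay for i in range(decay)]
    # Sustain: stay at the sustain level while the instrument is played.
    env += [sustain_level] * sustain
    # Hold: after a quick drop, stay at a lower level for a while.
    env += [hold_level] * hold
    # Release: fade out from the hold level to silence.
    env += [hold_level * (1.0 - (i + 1) / release) for i in range(release)]
    return env


if __name__ == "__main__":
    for step, amp in enumerate(adshr_envelope(2, 2, 3, 2, 2)):
        print(step, round(amp, 2))
```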

The time each note played by each instrument spends in each ADSHR phase is what we call the sound dynamics (the way the sound moves).

Compressors, decompressors, limiters, gates and similar dynamics tools all act on one or more parameters of that envelope, reshaping the final 'look' of the curve. The timbre stays the same, but the sound dynamics change.
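To see how a dynamics tool reshapes the envelope, here is a deliberately simplified Python sketch of a downward compressor's static curve applied to envelope values. The threshold, ratio and names are hypothetical. A real compressor also has attack and release times, which this sketch ignores. The point is only that the loud moments (the attack) are turned down while the quieter ones (the sustain) pass unchanged, which narrows the gap between peak and average.

```python
import math
from typing import List


def compress_envelope(env: List[float], threshold_db: float = -6.0,
                      ratio: float = 4.0) -> List[float]:
    """Apply a static downward-compression curve to linear amplitude values."""
    out: List[float] = []
    for amp in env:
        if amp <= 0.0:
            out.append(0.0)  # silence stays silent
            continue
        level_db = 20.0 * math.log10(amp)
        if level_db <= threshold_db:
            out.append(amp)  # below the threshold: left untouched
            continue
        # Above the threshold, each `ratio` dB of input becomes 1 dB of output.
        new_db = threshold_db + (level_db - threshold_db) / ratio
        out.append(10.0 ** (new_db / 20.0))
    return out


if __name__ == "__main__":
    envelope = [0.5, 1.0, 0.8, 0.3, 0.3, 0.3, 0.0]  # attack peak, then sustain
    for before, after in zip(envelope, compress_envelope(envelope)):
        print(f"{before:.2f} -> {after:.2f}")
```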

Dynamic Range

This concept becomes very important as soon as we start altering the sound dynamics in our productions.

The maximum volume of a track is known as its peak volume. During playback, there are moments when the volume reaches peaks well above the track's average level.

Usually, the Attack phase of each note is responsible for these peaks, while the average level is associated with the Sustain phase.
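Here is a quick numerical check of this claim. The Python sketch below builds a synthetic note whose first cycle is loud (the attack) and whose remaining cycles are quieter (the sustain). It then measures where the peak occurs and how close the overall RMS is to the sustain level. The sample rate, frequency, amplitudes and function names are arbitrary illustrative choices.

```python
import math
from typing import List

SAMPLE_RATE = 1000  # samples per second (illustrative, kept small)
FREQ = 50.0         # Hz, so one cycle lasts 20 samples


def synthetic_note(attack_samples: int, sustain_samples: int,
                   attack_amp: float = 1.0, sustain_amp: float = 0.25) -> List[float]:
    """Sine wave that is loud during the attack and quieter during the sustain."""
    samples = []
    for n in range(attack_samples + sustain_samples):
        amp = attack_amp if n < attack_samples else sustain_amp
        samples.append(amp * math.sin(2 * math.pi * FREQ * n / SAMPLE_RATE))
    return samples


def to_db(x: float) -> float:
    """Linear amplitude to dB relative to full scale."""
    return 20.0 * math.log10(x)


if __name__ == "__main__":
    note = synthetic_note(attack_samples=20, sustain_samples=980)
    peak_index = max(range(len(note)), key=lambda i: abs(note[i]))
    peak_db = to_db(abs(note[peak_index]))
    rms_db = to_db(math.sqrt(sum(s * s for s in note) / len(note)))
    sustain_rms_db = to_db(0.25 / math.sqrt(2))  # RMS of the sustain sine alone
    print(f"peak at sample {peak_index}: {peak_db:.2f} dBFS")
    print(f"overall RMS: {rms_db:.2f} dBFS (sustain alone: {sustain_rms_db:.2f} dBFS)")
```

The peak falls inside the attack, while the overall RMS lands about 1 dB above the sustain's own RMS and roughly 14 dB below the peak.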
